Robots are playing many roles in the coronavirus crisis – and offering lessons for future disasters

A cylindrical robot rolls into a treatment room to allow health care workers to remotely take temperatures and measure blood pressure and oxygen saturation from patients hooked up to a ventilator. Another robot that looks like a pair of large fluorescent lights rotated vertically travels throughout a hospital disinfecting with ultraviolet light. Meanwhile a cart-like robot brings food to people quarantined in a 16-story hotel. Outside, quadcopter drones ferry test samples to laboratories and watch for violations of stay-at-home restrictions.

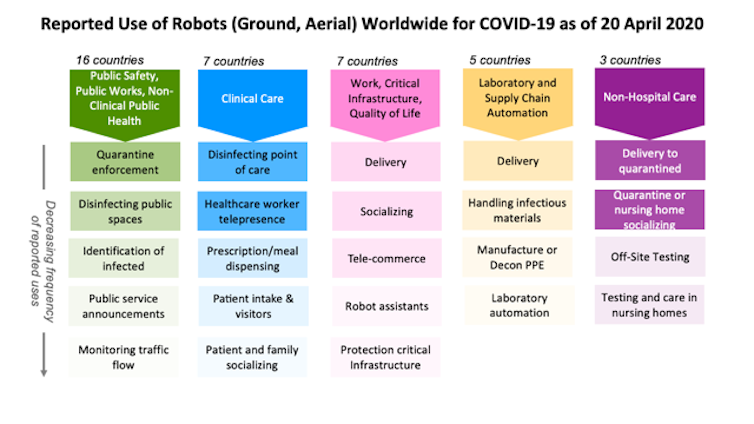

These are just a few of the two dozen ways robots have been used during the COVID-19 pandemic, from providing health care in and out of hospitals and automating testing to supporting public safety and public works and continuing daily work and life.

The lessons they’re teaching for the future are the same lessons learned at previous disasters but quickly forgotten as interest and funding faded. The best robots for a disaster are the robots, like those in these examples, that already exist in the health care and public safety sectors.

Research laboratories and startups are creating new robots, including one designed to allow health care workers to remotely take blood samples and perform mouth swabs. These prototypes are unlikely to make a difference now. However, the robots under development could make a difference in future disasters if momentum for robotics research continues.

Robots around the world

As roboticists at Texas A&M University and the Center for Robot-Assisted Search and Rescue, we examined over 120 press and social media reports from China, the U.S. and 19 other countries about how robots are being used during the COVID-19 pandemic. We found that ground and aerial robots are playing a notable role in almost every aspect of managing the crisis.

In hospitals, doctors and nurses, family members and even receptionists are using robots to interact in real time with patients from a safe distance. Specialized robots are disinfecting rooms and delivering meals or prescriptions, handling the hidden extra work associated with a surge in patients. Delivery robots are transporting infectious samples to laboratories for testing.

Outside of hospitals, public works and public safety departments are using robots to spray disinfectant throughout public spaces. Drones are providing thermal imagery to help identify infected citizens and enforce quarantines and social distancing restrictions. Robots are even rolling through crowds, broadcasting public service messages about the virus and social distancing.

At work and home, robots are assisting in surprising ways. Realtors are teleoperating robots to show properties from the safety of their own homes. Workers building a new hospital in China were able to work through the night because drones carried lighting. In Japan, students used robots to walk the stage for graduation, and in Cyprus, a person used a drone to walk his dog without violating stay-at-home restrictions.

Helping workers, not replacing them

Every disaster is different, but the experience of using robots for the COVID-19 pandemic presents an opportunity to finally learn three lessons documented over the past 20 years. One important lesson is that during a disaster robots do not replace people. They either perform tasks that a person could not do, or could not do safely, or take on tasks that free up responders to handle the increased workload.

The majority of robots being used in hospitals treating COVID-19 patients have not replaced health care professionals. These robots are teleoperated, enabling the health care workers to apply their expertise and compassion to sick and isolated patients remotely.

A small number of robots are autonomous, such as the popular UVD decontamination robots and meal and prescription carts. But the reports indicate that the robots are not displacing workers. Instead, the robots are helping the existing hospital staff cope with the surge in infectious patients. The decontamination robots disinfect better and faster than human cleaners, while the carts reduce the amount of time and personal protective equipment nurses and aides must spend on ancillary tasks.

Off-the-shelf over prototypes

The second lesson is that the robots used during an emergency are usually already in common use before the disaster. Technologists often rush out well-intentioned prototypes, but during an emergency, responders – health care workers and search-and-rescue teams – are too busy and stressed to learn to use something new and unfamiliar. They typically can’t absorb the unanticipated tasks and procedures, like having to frequently reboot or change batteries, that usually accompany new technology.

Fortunately, responders adopt technologies that their peers have used extensively and shown to work. For example, decontamination robots were already in daily use at many locations for preventing hospital-acquired infections. Sometimes responders also adapt existing robots. For example, agricultural drones designed for spraying pesticides in open fields are being adapted for spraying disinfectants in crowded urban cityscapes in China and India.

A third lesson follows from the second. Repurposing existing robots is generally more effective than building specialized prototypes. Building a new, specialized robot for a task takes years. Imagine trying to build a new kind of automobile from scratch. Even if such a car could be quickly designed and manufactured, only a few cars would be produced at first and they would likely lack the reliability, ease of use and safety that come from months or years of feedback from continuous use.

Alternatively, a faster and more scalable approach is to modify existing cars or trucks. This is how robots are being configured for COVID-19 applications. For example, responders began using the thermal cameras already on bomb squad robots and drones – common in most large cities – to detect infected citizens running a high fever. While the jury is still out on whether thermal imaging is effective, the point is that existing public safety robots were rapidly repurposed for public health.

Don’t stockpile robots

The broad use of robots for COVID-19 is a strong indication that the health care system needed more robots, just like it needed more of everyday items such as personal protective equipment and ventilators. But while storing caches of hospital supplies makes sense, storing a cache of specialized robots for use in a future emergency does not.

This was the strategy of the nuclear power industry, and it failed during the Fukushima Daiichi nuclear accident. The robots stored by the Japan Atomic Energy Agency for an emergency were outdated, and the operators were rusty or no longer employed. Instead, the Tokyo Electric Power Company lost valuable time acquiring and deploying commercial off-the-shelf bomb squad robots, which were in routine use throughout the world. While the commercial robots were not perfect for dealing with a radiological emergency, they were good enough and cheap enough for dozens of robots to be used throughout the facility.

Robots in future pandemics

Hopefully, COVID-19 will accelerate the adoption of existing robots and their adaptation to new niches, but it might also lead to new robots. Laboratory and supply chain automation is emerging as an overlooked opportunity. Automating the slow COVID-19 test processing that relies on a small set of labs and specially trained workers would eliminate some of the delays currently being experienced in many parts of the U.S.

Automation is not particularly exciting, but just like the unglamorous disinfecting robots in use now, it is a valuable application. If government and industry have finally learned the lessons from previous disasters, more mundane robots will be ready to work side by side with the health care workers on the front lines when the next pandemic arrives.


Robin R. Murphy, Raytheon Professor of Computer Science and Engineering; Vice-President Center for Robot-Assisted Search and Rescue (nfp), Texas A&M University; Justin Adams, President of the Center for Robot-Assisted Search and Rescue/Research Fellow - The Center for Disaster Risk Policy, Florida State University; and Vignesh Babu Manjunath Gandudi, Graduate Teaching Assistant, Texas A&M University

This article is republished from The Conversation under a Creative Commons license. Read the original article.