To safely explore the solar system and beyond, spaceships need to go faster – nuclear-powered rockets may be the answer

With dreams of Mars on the minds of both NASA and Elon Musk, long-distance crewed missions through space are coming. But you might be surprised to learn that modern rockets don’t go all that much faster than the rockets of the past.

There are many reasons a faster spaceship is a better one, and nuclear-powered rockets are one way to build it. They offer many benefits over traditional fuel-burning rockets or modern solar-powered electric rockets, but there have been only eight U.S. space launches carrying nuclear reactors in the last 40 years.

However, last year the laws regulating nuclear space flights changed and work has already begun on this next generation of rockets.

Why the need for speed?

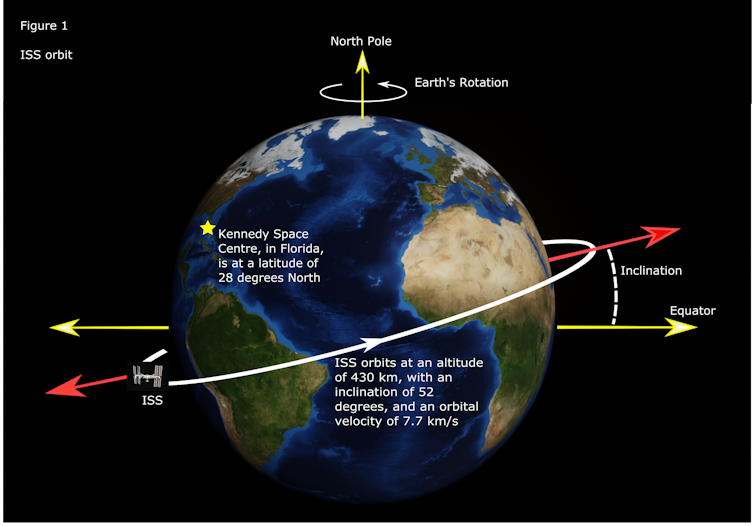

The first step of a space journey involves the use of launch rockets to get a ship into orbit. These are the large fuel-burning engines people imagine when they think of rocket launches and are not likely to go away in the foreseeable future due to the constraints of gravity.

Things get interesting once a ship reaches space. To escape Earth’s gravity and reach deep space destinations, ships need additional acceleration. This is where nuclear systems come into play. If astronauts want to explore anything farther than the Moon and perhaps Mars, they will need to travel very, very fast. Space is massive, and everything is far away.

There are two reasons faster rockets are better for long-distance space travel: safety and time.

Astronauts on a trip to Mars would be exposed to very high levels of radiation, which can cause serious long-term health problems such as cancer and sterility. Radiation shielding can help, but it is extremely heavy, and the longer the mission, the more shielding is needed. A better way to reduce radiation exposure is simply to get where you are going more quickly.

But human safety isn’t the only benefit. As space agencies probe farther out into space, it is important to get data from unmanned missions as soon as possible. Voyager 2 took 12 years just to reach Neptune, where it snapped some incredible photos as it flew by. If Voyager 2 had had a faster propulsion system, astronomers could have had those photos and the information they contained years earlier.

Speed is good. But why are nuclear systems faster?

Systems of today

Once a ship has escaped Earth’s gravity, there are three important aspects to consider when comparing any propulsion system:

- Thrust – how fast a system can accelerate a ship

- Mass efficiency – how much thrust a system can produce for a given amount of fuel

- Energy density – how much energy a given amount of fuel can produce

Today, the most common propulsion systems in use are chemical propulsion – that is, regular fuel-burning rockets – and solar-powered electric propulsion systems.

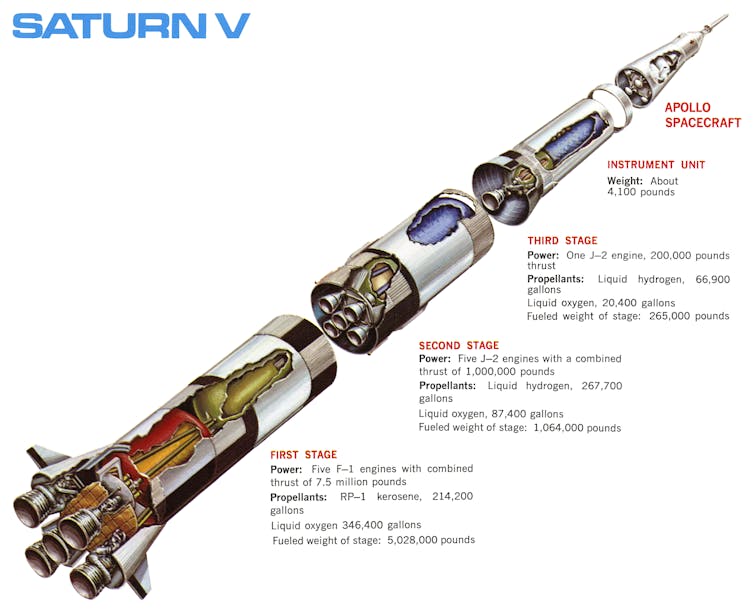

Chemical propulsion systems provide a lot of thrust, but chemical rockets aren’t particularly efficient, and rocket fuel isn’t that energy-dense. The Saturn V rocket that took astronauts to the Moon produced 35 million Newtons of force at liftoff and carried 950,000 gallons of fuel. While most of the fuel was used in getting the rocket into orbit, the limitations are apparent: It takes a lot of heavy fuel to get anywhere.

Electric propulsion systems generate thrust using electricity produced from solar panels. The most common way to do this is to use an electrical field to accelerate ions, such as in the Hall thruster. These devices are commonly used to power satellites and can have more than five times higher mass efficiency than chemical systems. But they produce much less thrust – about three Newtons, or only enough to accelerate a car from 0-60 mph in about two and a half hours. The energy source – the Sun – is essentially infinite but becomes less useful the farther away from the Sun the ship gets.
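The car comparison follows from Newton’s second law: a constant force F acting on a mass m changes its speed by v in a time t = m × v / F. The sketch below reproduces the figure under stated assumptions: a car of about 1,000 kg (the article does not give a mass) and no friction or air resistance. The function name is purely illustrative.

```python
MPH_TO_MS = 0.44704  # metres per second in one mile per hour


def seconds_to_reach(speed_mph, thrust_newtons, mass_kg):
    """Time to reach a speed from rest under a constant force, ignoring drag."""
    acceleration = thrust_newtons / mass_kg  # Newton's second law: a = F / m
    return speed_mph * MPH_TO_MS / acceleration


CAR_MASS_KG = 1000.0  # assumption: the article does not state the car's mass

hall_thruster_s = seconds_to_reach(60, 3.0, CAR_MASS_KG)
print(f"{hall_thruster_s / 3600:.1f} hours")  # prints "2.5 hours"
```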

One of the reasons nuclear-powered rockets are promising is that they offer incredible energy density. The uranium fuel used in nuclear reactors has an energy density that is 4 million times higher than that of hydrazine, a typical chemical rocket propellant. It is much easier to get a small amount of uranium to space than hundreds of thousands of gallons of fuel.

So what about thrust and mass efficiency?

Two options for nuclear

Engineers have designed two main types of nuclear systems for space travel.

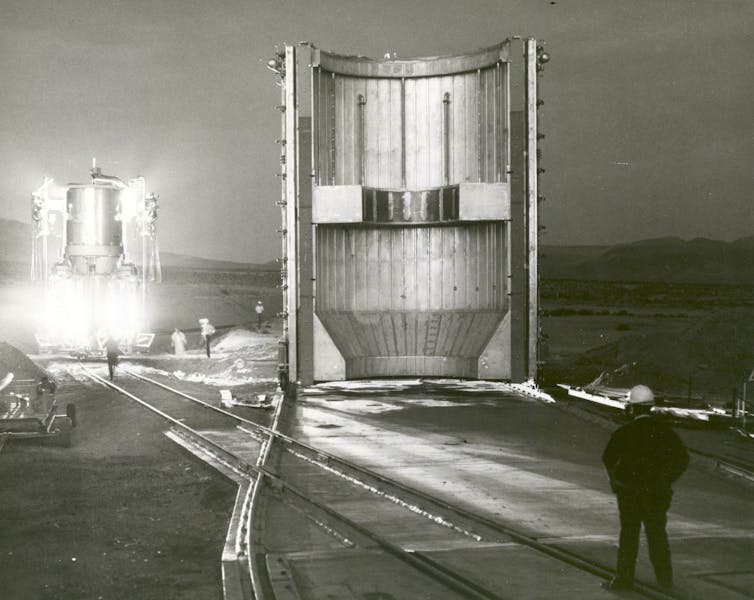

The first is called nuclear thermal propulsion. These systems are very powerful and moderately efficient. They use a small nuclear fission reactor – similar to those found in nuclear submarines – to heat a gas, such as hydrogen, and that gas is then accelerated through a rocket nozzle to provide thrust. Engineers from NASA estimate that a mission to Mars powered by nuclear thermal propulsion would be 20%-25% shorter than a trip on a chemical-powered rocket.

Nuclear thermal propulsion systems are more than twice as efficient as chemical propulsion systems – meaning they generate twice as much thrust using the same amount of propellant mass – and can deliver 100,000 Newtons of thrust. That’s enough force to get a car from 0-60 mph in about a quarter of a second.
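One way to see why doubling efficiency matters is the Tsiolkovsky rocket equation, which relates a ship’s change in speed to its exhaust velocity and to the share of its starting mass that is propellant. The sketch below is illustrative only. It treats “mass efficiency” as specific impulse, assumes a chemical engine at about 450 seconds (a typical value for a hydrogen-oxygen engine, not a figure from this article), takes the nuclear thermal engine at twice that, and picks a hypothetical 6 km/s maneuver.

```python
import math

G0 = 9.80665  # standard gravity in m/s^2, converts specific impulse to exhaust velocity


def propellant_fraction(delta_v_ms, isp_s):
    """Share of the starting mass that must be propellant (Tsiolkovsky rocket equation)."""
    exhaust_velocity = isp_s * G0
    return 1.0 - math.exp(-delta_v_ms / exhaust_velocity)


DELTA_V_MS = 6000.0                         # assumption: hypothetical 6 km/s maneuver
CHEMICAL_ISP_S = 450.0                      # assumption: typical hydrogen-oxygen engine
NUCLEAR_THERMAL_ISP_S = 2 * CHEMICAL_ISP_S  # "twice as efficient", per the article

chemical = propellant_fraction(DELTA_V_MS, CHEMICAL_ISP_S)
nuclear_thermal = propellant_fraction(DELTA_V_MS, NUCLEAR_THERMAL_ISP_S)
print(f"chemical: {chemical:.0%}, nuclear thermal: {nuclear_thermal:.0%}")
# prints "chemical: 74%, nuclear thermal: 49%"
```

Under these assumptions, roughly three quarters of the chemical ship’s starting mass has to be propellant, compared with about half for the nuclear thermal ship. That frees up mass for crew, shielding and cargo.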

The second nuclear-based rocket system is called nuclear electric propulsion. No nuclear electric systems have been built yet, but the idea is to use a high-power fission reactor to generate electricity that would then power an electrical propulsion system like a Hall thruster. This would be very efficient, about three times better than a nuclear thermal propulsion system. Since the nuclear reactor could create a lot of power, many individual electric thrusters could be operated simultaneously to generate a good amount of thrust.
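The link between reactor power and thrust can be sketched with a standard relation: the power carried by an exhaust jet is thrust × exhaust velocity / 2, and a thruster needs somewhat more electrical power than that because it is not perfectly efficient. The numbers below are assumptions for illustration, not figures from the article, apart from the three Newtons per thruster: a specific impulse of 2,000 seconds, 60% thruster efficiency and a hypothetical reactor supplying 1 megawatt of electricity.

```python
G0 = 9.80665  # standard gravity in m/s^2, converts specific impulse to exhaust velocity


def electrical_power_watts(thrust_newtons, isp_s, efficiency):
    """Electrical input power: jet power (F * v_e / 2) divided by thruster efficiency."""
    exhaust_velocity = isp_s * G0
    return thrust_newtons * exhaust_velocity / (2.0 * efficiency)


THRUST_PER_THRUSTER_N = 3.0    # thrust figure quoted earlier for electric propulsion
ISP_S = 2000.0                 # assumption: representative Hall thruster value
EFFICIENCY = 0.6               # assumption: share of electrical power that reaches the jet
REACTOR_POWER_W = 1_000_000.0  # assumption: hypothetical 1 MW of electrical output

per_thruster_w = electrical_power_watts(THRUST_PER_THRUSTER_N, ISP_S, EFFICIENCY)
thruster_count = int(REACTOR_POWER_W // per_thruster_w)
total_thrust_n = thruster_count * THRUST_PER_THRUSTER_N
print(f"{per_thruster_w / 1000:.0f} kW each, {thruster_count} thrusters, {total_thrust_n:.0f} N total")
# prints "49 kW each, 20 thrusters, 60 N total"
```

With these assumed values, a megawatt-class reactor could run about 20 such thrusters at once. That is still far less thrust than a nuclear thermal engine, but many times more than a single solar-powered thruster.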

Nuclear electric systems would be the best choice for extremely long-range missions because they don’t require solar energy, have very high efficiency and can give relatively high thrust. But while nuclear electric rockets are extremely promising, there are still a lot of technical problems to solve before they are put into use.

Why aren’t there nuclear-powered rockets yet?

Nuclear thermal propulsion systems have been studied since the 1960s but have not yet flown in space.

Regulations first imposed in the U.S. in the 1970s essentially required case-by-case examination and approval of any nuclear space project from multiple government agencies and explicit approval from the president. Along with a lack of funding for nuclear rocket system research, this environment prevented further improvement of nuclear reactors for use in space.

That all changed when the Trump administration issued a presidential memorandum in August 2019. While upholding the need to keep nuclear launches as safe as possible, the new directive allows nuclear missions with lower amounts of nuclear material to skip the multi-agency approval process. Only the sponsoring agency, such as NASA, needs to certify that the mission meets safety recommendations. Larger nuclear missions would go through the same process as before.

Along with this revision of regulations, NASA received US$100 million in the 2019 budget to develop nuclear thermal propulsion. DARPA is also developing a space nuclear thermal propulsion system to enable national security operations beyond Earth orbit.

After 60 years of stagnation, it’s possible a nuclear-powered rocket will be heading to space within a decade. This exciting achievement will usher in a new era of space exploration. People will go to Mars, and science missions will make new discoveries all across our solar system and beyond.


Iain Boyd, Professor of Aerospace Engineering Sciences, University of Colorado Boulder

This article is republished from The Conversation under a Creative Commons license. Read the original article.